This article is at least a year old

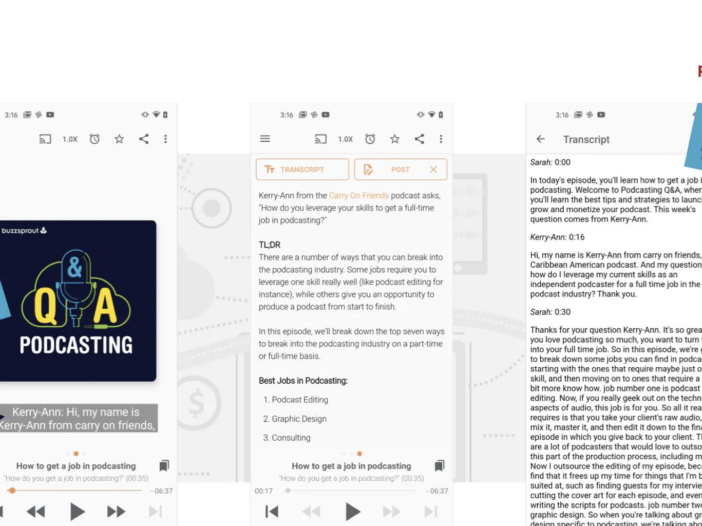

Buzzsprout, one of the world’s largest podcast hosting platforms, has announced the launch of a new feature set that allows podcasters to easily distribute their own episode transcripts to listeners.

This toolset is a huge step forward in improving the accessibility of podcasts for the hearing impaired, as well as enhancing the end listener’s experience by allowing them to read along while listening. Additionally, transcription is critical to search engine optimization (SEO) and can boost podcasters’ ability to get discovered and grow their audiences.

“We love podcasts and are in a good position to provide solutions to some of the growing pains this industry is facing,” said Kevin Finn, Co-Founder of Buzzsprout. “Discoverability and accessibility are at the top of our list. It’s been encouraging to see app developers share our enthusiasm for this solution.”

Podcast Addict, a very popular podcast listening app on Android, is the first to integrate Buzzsprout’s new transcript distribution toolset into its app, providing its listeners with synchronized captions and a way to view the entire transcript from within the app. Buzzsprout is working closely with others in the industry and expects to announce additional integrations in the coming weeks and months.

“I’m thrilled to bring transcripts to Podcast Addict listeners. A big thanks to Buzzsprout for adding this in their feeds and for providing the implementation specs. This will benefit both listeners and podcasters,” said Xavier Guillemane, Developer of Podcast Addict. “When people see this, they are going to want it available everywhere.”

The launch of this transcription toolset is a culmination of years of work. Buzzsprout is currently distributing over 46,000 transcripts with hundreds more being published through the platform every day. For more information on the importance of podcast transcripts and how you can add them to your own podcast or listening application, visit the Buzzsprout Blog.

Buzzsprout is the premier podcast hosting platform for new and experienced podcasters. Having helped over 300,000 podcasters start their own podcast, Buzzsprout is a trusted platform that takes away the complexity of starting a podcast and provides ease of use, educational tips, and a modern toolset to help your show stand out. Buzzsprout is one of the largest and fastest-growing hosting platforms, offering everything podcasters need to publish on Apple Podcasts, Spotify, Google Podcasts, Podcast Addict, and hundreds of other podcast-listening apps. To learn more, visit www.buzzsprout.com.

This is a press release which we link to from Podnews, our daily newsletter about podcasting and on-demand. We may make small edits for editorial reasons.

Companies mentioned above: Apple, Apple Podcasts, Buzzsprout, Google, Google Podcasts, Spotify

Google Podcasts Manager – Why Podcasters Need This – Search Engine Journal


Google announced a service that helps podcasters gain more listeners from Google and get listener analytics data.

Google Webmasters Twitter account announced a new feature called Podcasts Manager. Podcasts Manager provides analytics data about listeners but also helps podcasters manage their podcasts for Google search.

The tweet described Podcasts Manager like this:

“Podcasters can see impressions and clicks for Google Podcasts results that appear in Search, as well as top discovered episodes and search terms that led to their podcast.”

They also announced a new forum community where podcasters can turn to each other for help.

“For podcasters curious about how to optimize their podcast for Google, we’ve put together a few pointers in our newly launched Podcasts Manager forum”

The purpose of Google Podcasts Manager is to give podcasters the insights to understand which podcasts do well and how much of each podcast is listened to, and to help Google show podcasts across a wide range of its services, like Google Search, Google Home and Android Auto.

Google Podcasts Manager provides analytics data related to the popularity of the podcasts with listeners.

Information provided includes:

The above is a partial list of the kind of data Google will show a podcaster.

Google only collects data from Google properties. Google collects and shows you the data only when a listener discovers your podcast through a Google service or app.

Google will show your podcast to interested listeners across a variety of devices.

This is where Google says it will show your podcast:

“Google Search on all browsers, desktop and mobile. Users can play your episodes in the browser (sample Google Search result shown below).

Google Search App for Android (requires v6.5 or higher of the Google Search App)

Google Podcasts app for mobile devices

Google Home speaker system

Content Action for the Google Assistant

Android Auto in your car”

Being able to manage a podcast in Google and improve how it is shown across Google’s services is valuable because it can help the podcast gain more listeners. Additionally, the service will help podcasters better understand which podcasts do well and which don’t, which can help podcasters give their listeners the kind of content they want to hear.

Podcasters can see impressions and clicks for Google Podcasts results that appear in Search, as well as top discovered episodes and search terms that led to their podcast.

— Google Webmasters (@googlewmc) October 13, 2020

Google Podcasts Manager Page

Google Podcasts Manager Sign Up Page

Google Podcasts Manager Forum

Podcasts Manager Forum: Pointers on Optimizing Podcasts for Google

I have 25 years hands-on experience in SEO, evolving along with the search engines by keeping up with the latest …


In a world ruled by algorithms, SEJ brings timely, relevant information for SEOs, marketers, and entrepreneurs to optimize and grow their businesses — and careers.

Copyright © 2024 Search Engine Journal. All rights reserved. Published by Alpha Brand Media.

Google: No SEO Benefit to Audio Versions of Text Posts – Search Engine Journal


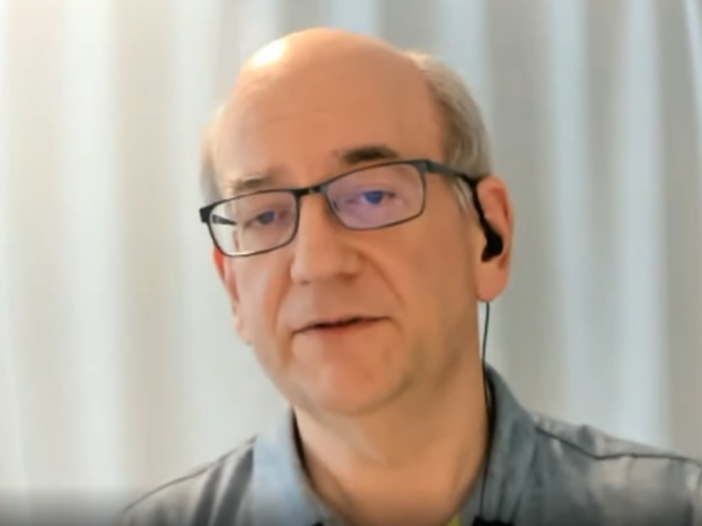

Providing an audio recording of a text-based web page does not improve SEO, says Google’s John Mueller.

Google’s John Mueller says there’s no inherent SEO benefit to adding an audio version of a text-based web page.

This topic is discussed during the Google Search Central SEO hangout recorded on February 12.

The following question is submitted to Mueller:

“Would adding an audio version of a page’s content help with search in any way? Other than the obvious accessibility improvement.”

Adding an accompanying audio recording to a written post is something more publishers have been doing as of late.

Is it helping those publishers in search rankings?

Here’s what Mueller has to say.

Unlike photo and video, Google doesn’t do anything special with audio content.

Audio content is not processed separately by Google. At most it might be seen as a piece of video content which could result in a video snippet.

Mueller says that, as far as he knows, adding an audio recording to a text post does not help or hurt rankings.

“As far as I know we don’t do anything with audio versions of content. We also wouldn’t see that as duplicate content, so it’s not that you have to avoid that.

I mean, duplicate content itself isn’t something you really have to avoid, but even if you wanted to avoid the situation that you’re suddenly ranking for the same things with different pieces of content, the audio version is something that we, as far as I know, would not even process separately.

At most we might see that as a piece of video content and show that also with a video snippet. But, essentially, it wouldn’t help or detract from a page’s overall ranking.”

Mueller is about to move on to another question when an SEO named Robb Young jumps in to ask for clarification.

He asks: Doesn’t adding an audio recording do anything for SEO?

Is a page not seen as higher quality when it has text and audio as compared to just text?

Mueller stays firm on the answer he originally provided. Google does not view a page as higher quality because it has multiple types of content.

However, there could be indirect benefits such as the page getting shared around more.

“I don’t think we would look at that and say: “oh there are different kinds of content here, it’s a better page because of that.”

It might be that there are indirect effects, like if users find this page more useful and they recommend it more, that’s something that could have an effect.

But it’s not the case that we look at the types of content on a page and say: “oh there’s two types versus five types, the one with five types is better.””

Lastly, Mueller adds there are benefits associated with adding images and/or video to a web page because those content types can each rank independently.

There’s no separate set of search rankings for audio content.

“I think it’s a bit different with video and images in that images and video themselves can rank independently. Like in image search and video search you can have the same piece of content be visible in those other surfaces. But for audio we don’t really have a separate audio search where that page could also rank.

The closest that could come there is the podcast search that we have, or the podcast one box thing, but that’s really tied to the podcast content type where you have a feed of podcast information and we can index it like that. But just having audio on a page by itself, I don’t think that would change anything automatically in our systems.”

Hear the full question and answer below:

Matt G. Southern, Senior News Writer, has been with Search Engine Journal since 2013. With a bachelor’s degree in communications, …


How Can a Podcast Increase SEO? + 13 Podcast SEO Tips – Rev


Podcast transcription can diversify your content and improve your website's SEO, making your content more accessible and discoverable.


Podcasts are an effective way to reach new audiences and bring visibility to your brand. Diversifying your content with podcast transcription will help you improve your website’s SEO and grow your audience.

Transcription offers your listeners the best of audio and written content. It makes your content more accessible and improves your site’s Google Search rankings. Let’s look into the importance of SEO and how you can use podcast audio transcription to boost SEO.

As with other digital marketing activities, it’s not enough to record your podcast, publish it, and hope for the best. You shouldn’t rely on shares from your existing audience to grow your reach. SEO helps you reach an audience outside of your immediate marketing efforts by making your content more discoverable through search engines. The better your SEO ranking is, the more likely a search engine is to pick up your content and rank it for certain keywords people may search.

SEO is important, but your podcast audio can’t be optimized on its own. You need to transcribe the audio file to get the benefits.

Here’s how your podcast transcripts actually help boost your SEO:

Finding the right keywords is a key aspect of optimizing your podcast with a text transcript. Keywords show Google that your podcast episode relates to user searches. This increases the chances of it showing up in search results.

Keywords are not the only significant factor in search engine rankings. Being seen as an authority in your niche also helps drive traffic to your content.

Podcast transcriptions make it easier for authoritative sites to link to you, especially if they want to call out something specific from the podcast without skipping through the entire recording. Backlinks allow you to build SEO and reach new audiences.

Audio is not an ideal medium for everyone—for example, those who are Deaf or Hard of Hearing, or those whose first language is not English. Transcripts make your content more accessible to more people and improve the user experience. They also make the content more easily searchable for those who may want to use your podcast material as a resource or backlink.

Now that you know the benefits, here are some ways to improve your podcast SEO and increase your podcast episodes’ reach.

Consider topics and keywords your potential audience may be interested in before recording. Plan your podcast episodes in order to optimize for relevant keywords. Then your podcast transcripts will automatically be optimized for SEO and improve the quality of content on your website.

Keywords are important, but podcast hosts need to mix it up. Use one main keyword per episode. This keyword should have low competition and high search volume to improve your chances of ranking. You can use various SEO tools to help you with this process.

The title of your podcast is like the subject line of an email. If it’s not good, no one will click on it. Appealing titles will encourage people to listen and will keep them coming back. For example, if your podcast focuses on productivity, make sure your title reflects that.

Transcripts are time-consuming to produce manually and often costly to order, which may deter some podcasters from pursuing this content strategy. Rev provides quick and affordable transcripts that help bring better SEO within reach.


Audio SEO Best Practices: How to Optimize for Audio Search – Voices.com

How people find your podcast in apps – who indexes what? – Podnews


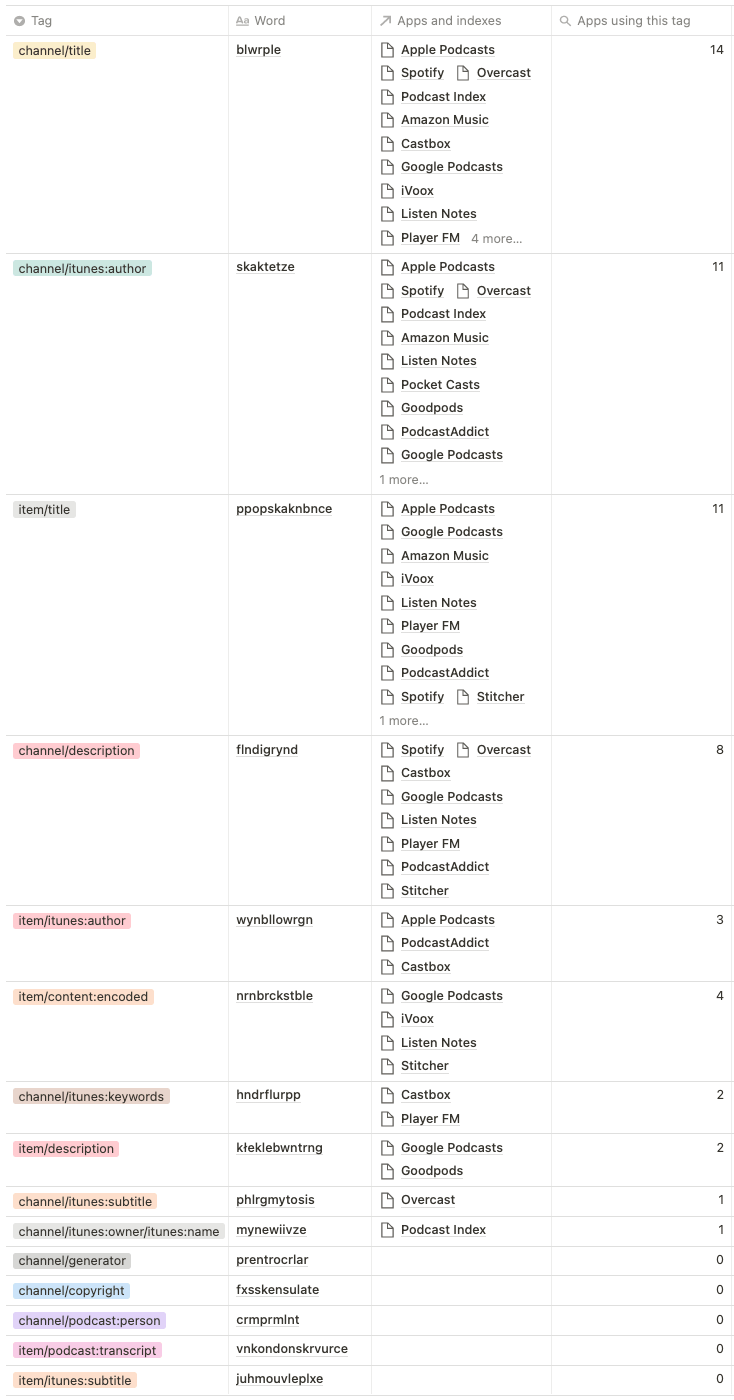

Podcast metadata spans the spectrum from the minimal and functional to the obscure and mysterious to the highly-optimised and keyword-stuffed. For some, a title can be another form of creative expression, whereas for many – especially those podcasting for business, or with plans to monetise – it can feel like essential groundwork in garnering new listeners from search.

For years, the prevailing wisdom has been that your podcast name and episode titles are the biggest factor, but presumably a good, detailed but not over-long description can help capture some of those search terms that are relevant but would be too spammy to put in the title?

Well, sort of. The truth is that’s pretty much never been the case for Apple Podcasts, which only cares about your titles and your author tags. But what about the increasing number of equally-important or otherwise complementary podcast directories? Where do we need to focus our efforts if we want episodes to surface for relevant search terms, and how can we avoid filling our titles with word salad?

I teamed up with James, the editor of Podnews, to perform some experiments on a couple of our feeds we knew wouldn’t cause waves of confusion were we to stuff them full of nonsense words in the name of science. So James updated the metadata for his Podclock podcast, and I did the same with a now-moribund feed.

The idea was to pick a different nonsense word for each relevant podcast-related tag in our RSS feeds, and to see which apps picked up which words.
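The approach can be sketched in a few lines of Python. This is only an illustration, not the feed we actually published: the marker words and the choice of tags below are hypothetical, and a real feed would need the rest of its required elements.

```python
import xml.etree.ElementTree as ET

ITUNES_NS = "http://www.itunes.com/dtds/podcast-1.0.dtd"
ET.register_namespace("itunes", ITUNES_NS)

# Hypothetical marker words: one unique nonsense word per tag under test.
MARKERS = {
    "title": "zorblatt",
    "description": "quimbrex",
    f"{{{ITUNES_NS}}}author": "flandoop",
    f"{{{ITUNES_NS}}}keywords": "wexmorth",
}


def build_test_channel(markers):
    """Build a minimal <rss><channel> tree with one marker word planted per tag."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    for tag, word in markers.items():
        ET.SubElement(channel, tag).text = f"Test feed {word}"
    return rss


def tag_for_marker(markers, word):
    """Map a search term that produced a hit back to the tag it was planted in."""
    for tag, marker in markers.items():
        if marker == word:
            return tag
    return None


feed_xml = ET.tostring(build_test_channel(MARKERS), encoding="unicode")
print(feed_xml)
```

Searching an app for each marker word in turn, and noting which searches return the test feed, then tells you which tags that app indexes.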

For our experiment, we limited the list of apps to

This list represents the most popular podcast apps, and all podcast apps with more than 1% market share according to typical podcast host reports.

These are the tags in a podcast’s RSS feed that relate to the podcast as a whole, not individual episodes. If your podcast covers one particular topic in-depth or you’re looking to build a community around a specific area of interest, your podcast-level metadata may be a key contributor to your success in search. So, let’s look at what podcast-level metadata is being indexed.

Unsurprisingly, a search on your podcast name will return your podcast in all apps. If it doesn’t, that probably means your title is too common. The word ‘Podcast’ in your title probably doesn’t help your SEO either, since Podcast Index data suggests that more than 600,000 shows have that word in the title.

Castbox, Google Podcasts, Listen Notes, Player FM, Podcast Addict, Spotify, and Stitcher all add your podcast description to their indexes, so info you add here will surface in search. Overcast’s web search will also surface info from your description, but crucially this does not apply to the app.

That means Apple Podcasts, Amazon Music and Pocket Casts do not index your podcast’s description.

The new Podcasting 2.0 tag for storing info about who contributes to a podcast is not being used in any search engine we surveyed. This includes the Podcast Index itself.

I’m a little sad about this – even if not surprised to see it snubbed by established apps – as guest and host names feel like something people would want to search on. However, past conversations with the tech team at Podchaser have led me to understand these tags are ripe for spammers, who’ll put any old famous name in there in the hopes it’ll result in more downloads.

This isn’t to suggest the tag has no value. Far from it. Just because the names aren’t being surfaced in search results doesn’t mean it’s not a great way to link podcasts together, but I’d love to see it influence results for guest-based podcasts. Still, early days.

iVoox, Player FM and Stitcher were the only apps not to index this tag.

Again, the only time this came up was in Overcast’s web search, but not in the app. That essentially means this tag is not being indexed in any meaningful way. Unsurprisingly so, since this tag has been removed from the Apple Podcasts RSS guide.

The only meaningful place this turned up was the Podcast Index website. Other than that, consider this tag for informational purposes only.

This tag is no longer in the Apple Podcasts RSS guide, which is understandable given that it has as much use as your website’s <meta> keyword tags. That said, CastBox and Player FM still index this tag, so if you just wanted to cater to users of those apps and those apps alone, knock yourself out.

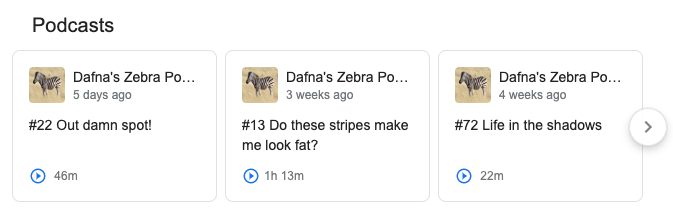

These are the tags that relate to each episode in your podcast. If you cover uniquely different topics or have interesting guests each episode, chances are this is the ground you’ll be fighting for in search: if you can meet a prospective listener’s need with a specific and relevant episode, you might just have hooked a new subscriber. So let’s look at what episode-level metadata apps and directories are indexing.

The Podcast Index, Pocket Casts and Overcast are the only apps not to surface episode-level titles in search. What that shows me is that these apps are much more focused on discovery of podcast series – not individual episodes – by keyword.

Also, you need to tell CastBox that you want to search by episode, as its search engine isn’t smart enough to search both podcast-level and episode-level metadata at the same time.

The plain-text podcast description is only being indexed by Google Podcasts and GoodPods. I had been thinking it’s best to ignore this tag, as apps can’t decide whether to allow HTML or not, though recently support appears to be a little more consistent.

This is where your show notes live. However, Google Podcasts, iVoox, Stitcher, and Listen Notes are the only apps that index rich-text episode descriptions.

It may be that if <content:encoded> is present, aggregators ingest this information instead of the <description>, and this is what we’re uncovering here. This certainly happens when some apps display episode descriptions. This might need a little more investigation.
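If that guess is right, the ingest logic in such an aggregator might look something like the sketch below. This is an assumption about how an app could behave, not a description of any particular app's code; the `episode_notes` helper and the sample item are made up for illustration.

```python
import xml.etree.ElementTree as ET

CONTENT_NS = "http://purl.org/rss/1.0/modules/content/"


def episode_notes(item):
    """Prefer <content:encoded> when it is present and non-empty, else <description>."""
    encoded = item.findtext(f"{{{CONTENT_NS}}}encoded")
    if encoded and encoded.strip():
        return encoded.strip()
    return (item.findtext("description") or "").strip()


# Hypothetical episode with both a plain description and rich show notes.
SAMPLE_ITEM = """
<item xmlns:content="http://purl.org/rss/1.0/modules/content/">
  <title>Episode 1</title>
  <description>Plain summary</description>
  <content:encoded><![CDATA[<p>Rich show notes</p>]]></content:encoded>
</item>
""".strip()

item = ET.fromstring(SAMPLE_ITEM)
print(episode_notes(item))
```

An app working this way would never index a marker word placed only in `<description>` whenever `<content:encoded>` is also present, which would look the same from the outside as the app ignoring `<description>` altogether.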

This deprecated tag is a wasteland. It’s not the only tag to garner no search results across the apps we surveyed, but it’s one of the few that surprises me a little, so I thought it worth mentioning.

Apple Podcasts, CastBox, and Podcast Addict are the only apps to index the episode-level author tag.

Unless you’re going to operate like the minority of podcasts that keyword-stuff their titles – which is a bit tacky and against Apple’s guidelines – I think it’s unwise to put too many eggs in the podcast-app search basket. Search is just not evolved enough within these apps to be meaningful.

CastBox and Google Podcasts are the hungriest apps, indexing the largest number of tags. Pocket Casts was surprising in that it appeared to index only the most basic of podcast-level metadata.

To me these findings highlight the need for good podcast websites. Compelling titles, rich and meaningful show notes, useful links, host and guest bios; all of these are useful for placement within Google and other web search engines because they’re useful to humans. But there are some gaps in podcast search that need to be filled, even if at the expense of letting in a little spam.

For what it’s worth, I don’t think keyword spam is a consideration being made by these apps. I think it’s simply that not enough time or effort has been put into good search database design. Many apps are powered by quite rudimentary relational databases that perform well for linking one table to another, but perform less well for full-text search.

For apps like Overcast, Pocket Casts, and Spotify, search seems to exist mainly to allow a podcast to be found by name, rather than by topic.

If you’re already working to best practices, nothing in these findings should change your behaviour. Maybe it’ll remove a couple of things from the pipeline, since it really doesn’t matter if you have a relevant set of keywords in your feed, for example.

As a podcast producer, this helps cement certain key points:

I have a Hitchhiker’s Guide to the Galaxy podcast. It’s called Beware of the Leopard, which is a well-known reference to a line in the first episode of the radio show (it’s also in the book, the TV show, and the film). But you won’t find my podcast by searching for “hitchhikers guide to the galaxy” (in most apps at least). You might find it if you search one of the place or character names (like “arthur dent”), but only if we mentioned one of them in the episode title.

This is obviously a failing on my part, since “Beware of the Leopard – the Hitchhiker’s Guide to the Galaxy podcast” isn’t an unreasonably-long or thirsty name, but it feels a bit clumsy, and since my podcast website is powered by my podcast host, I’d be taking up a lot of screen real-estate by more-than-doubling my podcast title length (this isn’t a problem if you run your own website).

As John Dinkel spotted in January 2020, Parcast has an SEO strategy when it comes to titling their podcasts that might be worth considering.

The best podcast players do one thing really well: play podcasts. I think we as podcasters assume that the burden of responsibility for “discoverability 🤮” is on their shoulders, but that’s not what podcast apps are for… they’re for playing podcasts.

GoodPods is an app for discoverability more than it is a player, so I’d love to see them do more in search.

App developers have an opportunity to improve the space, by implementing more than the rudimentary full-text search their database allows. MongoDB is a flexible database which is great for storing episodes alongside series, and its full-text index is pretty good. Then of course there are search-first database engines like Elasticsearch and Solr, and super-simple ones like Whoosh and Xapian. A judicious mix of indexing and weighting – where the developer decides which fields are more likely to be of importance to the user – and perhaps a bit of sugar added to the final score for each result – like the number of people in your app subscribed to the feed, how frequently it puts out episodes, how recently it has been updated, or how long it’s been around for – can all contribute to a healthy in-app search.
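As a rough illustration of that mix of weighting and "sugar", here is a toy scorer in plain Python. The field weights, the boost formula and the `Podcast` fields are all assumptions chosen for the example; a real app would lean on its search engine's own ranking features rather than hand-rolled term counting.

```python
import math
from dataclasses import dataclass

# Hypothetical field weights: a title match counts more than a description match.
FIELD_WEIGHTS = {"title": 3.0, "author": 2.0, "description": 1.0}


@dataclass
class Podcast:
    title: str
    author: str
    description: str
    subscribers: int        # in-app subscribers to the feed
    days_since_update: int  # days since the latest episode


def text_score(podcast, query):
    """Sum weighted term counts across the indexed fields."""
    terms = query.lower().split()
    score = 0.0
    for field, weight in FIELD_WEIGHTS.items():
        words = getattr(podcast, field).lower().split()
        score += weight * sum(words.count(term) for term in terms)
    return score


def final_score(podcast, query):
    """Boost the text score by popularity and freshness; no text match means no result."""
    base = text_score(podcast, query)
    if base == 0:
        return 0.0
    popularity = math.log1p(podcast.subscribers)
    freshness = 1.0 / (1.0 + podcast.days_since_update / 30.0)
    return base * (1.0 + 0.1 * popularity + 0.5 * freshness)


def search(podcasts, query):
    """Return matching podcasts, best score first."""
    scored = [(final_score(p, query), p) for p in podcasts]
    hits = [(s, p) for s, p in scored if s > 0]
    return [p for s, p in sorted(hits, key=lambda pair: pair[0], reverse=True)]
```

The boosts only multiply an existing text match, so a popular but irrelevant show never outranks a relevant one simply by being popular.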

In time, this may get easier with transcripts. We haven’t tested full transcripts, but that might be an experiment worth running at some point. Here I’m not necessarily thinking about adding data into your transcript that a search engine will like, but instead thinking longer-term about how podcast marketplaces will use their own in-house transcripts to boost search, which is something Castbox, and sometimes Apple Podcasts, already do. Spotify has also said that they’ll be using transcripts to boost their search in future.

I was in the web development game long before I got paid to make podcasts, and SEO was a topic that came up a lot, as you might imagine. The fundamental truths haven’t changed in the last 15 years or so: search engines are there to help people find things, not to help customers find businesses (or in our case, podcasts to find listeners). Our job is to make the best possible podcast we can, and to use metadata to describe it in a way that is helpful to humans. Like fashion, the rules of good and bad SEO change with the seasons, and trying to keep on top of them at all times is tiring and ultimately only ever leads to short-term wins.

A great way to get new listeners to a single episode is to stuff your podcast name and episode title with keywords. But it’s not how you keep them, and it’s not how you build trust with them.

The object is not to find new listeners through these apps (unless you’re paying for ads within them). Instead, make your podcast easy to find by name, so that when people read your insightful tweet, your on-point newsletter submission, your helpful blog post or your conference talk slide, they know exactly what to search for, whichever app they use.

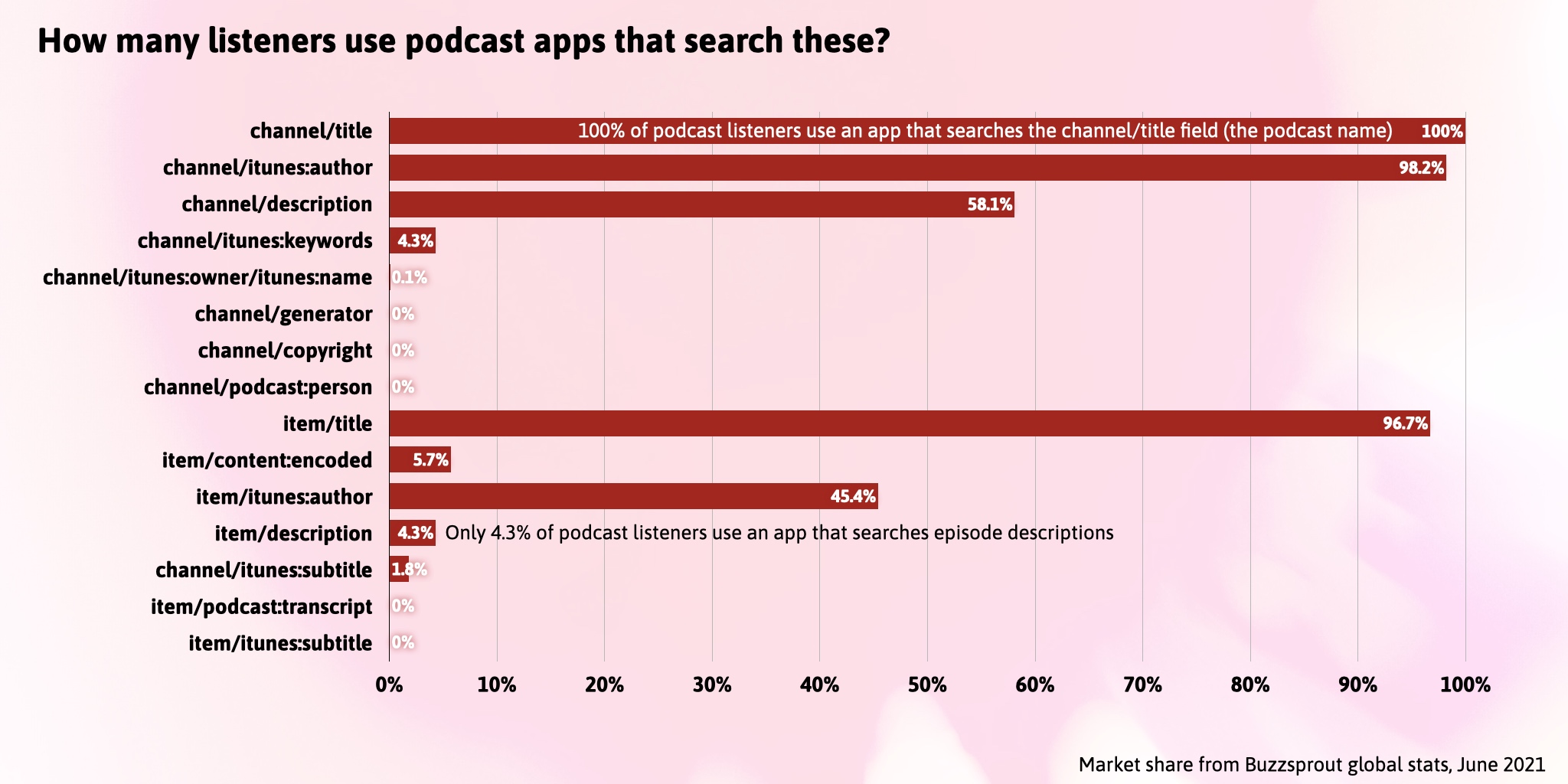

James Cridland adds: As an afterthought, it’s worthwhile looking at the market share of the apps that were tested. I took that data from Buzzsprout’s global stats figures from June, and overlaid them on the results to help us know where to put our energy as podcasters.

Only 4.3% of podcast listeners use an app that searches episode descriptions (or, more accurately, only 4.3% of downloads from podcast apps come from those that support searching through episode descriptions). Another reason, perhaps, to focus a little more on episode titles?
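The overlay itself is simple arithmetic: add up the download share of every app that indexes a given field. A minimal sketch follows, using placeholder app names and made-up percentages rather than the real Buzzsprout figures or our real results.

```python
def share_supporting(field, app_share, app_fields):
    """Sum the download share (in percent) of the apps that index `field`."""
    return sum(
        share
        for app, share in app_share.items()
        if field in app_fields.get(app, set())
    )


# Placeholder data for illustration only; not the real market-share numbers.
app_share = {"app_a": 50.0, "app_b": 30.0, "app_c": 5.0}
app_fields = {
    "app_a": {"episode_title"},
    "app_b": {"episode_title"},
    "app_c": {"episode_title", "episode_description"},
}

print(share_supporting("episode_description", app_share, app_fields))
print(share_supporting("episode_title", app_share, app_fields))
```

Running the same calculation for each tag gives a quick ranking of where metadata effort reaches the most listeners.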

Download the CSVs: trackable words · apps and indexes · podcasts

This page contains automated links to Apple Podcasts. We may receive a commission for any purchases made.
