Google’s John Mueller and Lizzi Sassman answered a question about Content Decay, an actual problem that has little to do with content

Google’s Lizzi Sassman and John Mueller answered a question about Content Decay, expressing confusion over the phrase because they’d never heard of it. Turns out there’s a good reason: Content Decay is just a new name created to make an old problem look like a new one.

Google tech writer Lizzi Sassman began an episode of the Search Off the Record podcast by explaining that they were talking about Content Decay because someone had submitted the topic, and then remarked that she had never heard of Content Decay.

She said:

“…I saw this come up, I think, in your feedback form for topics for Search Off the Record podcast that someone thought that we should talk about content decay, and I did not know what that was, and so I thought I should look into it, and then maybe we could talk about it.”

Google’s John Mueller responded:

“Well, it’s good that someone knows what it is. …When I looked at it, it sounded like this was a known term, and I felt inadequate when I realized I had no idea what it actually meant, and I had to interpret what it probably means from the name.”

Then Lizzi pointed out that the name Content Decay sounds like it’s referring to something that’s wrong with the content:

“Like it sounds a little bit negative. A bit negative, yeah. Like, yeah. Like something’s probably wrong with the content. Probably it’s rotting or something has happened to it over time.”

It’s not just Googlers who don’t know what the term Content Decay means. SEOs with over 25 years of experience, myself included, had never heard of it either. I reached out to several experienced SEOs, and none of them had heard of the term Content Decay.

Like Lizzi, anyone who hears the term Content Decay will reasonably assume that it refers to something that’s wrong with the content. But that is incorrect. As Lizzi and John Mueller figured out, content decay is not really about content; it’s just a name that someone gave to a natural phenomenon that’s been happening for thousands of years.

If you feel out of the loop because you too have never heard of Content Decay, don’t. Content Decay is one of those inept labels someone coined to put a fresh name on a problem that is so old it predates not just the Internet but the invention of writing itself.

What people mean when they talk about Content Decay is a slow drop in search traffic. But a slow drop in traffic is not a definition; it’s a symptom of the actual problem, which is declining user interest. Declining user interest in a topic, product, service, or virtually any entity is normal and expected, and it can sneak up on you and affect organic search trends, even for evergreen topics. Content Decay is an inept name for a real SEO issue that needs to be dealt with. Just don’t call it Content Decay.

Dwindling interest is a longstanding phenomenon that is older than the Internet. Fashions, musical styles, and topics come and go, both offline and online.

A classic example of dwindling interest is how search queries for digital cameras collapsed after the introduction of the iPhone because most people no longer needed a separate camera device.

Similarly, the problem with dwindling traffic is not necessarily the content. Often, it’s search trends. If search trends are the reason for declining traffic, then the cause is probably declining user interest, and the problem to solve is figuring out why interest in a topic is changing.

Typical reasons for declining user interest:

When diagnosing a drop in traffic, always keep an open mind to all possibilities, because sometimes there’s nothing wrong with the content or the SEO. The problem may lie with user interest, trends, and other factors that have nothing to do with the content itself.
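One way to apply that open-minded diagnosis is to compare the relative change in your organic clicks with the relative change in search interest for the topic over the same period. The sketch below is a hypothetical heuristic, not a Google method: the function names, the 10-point tolerance, and the assumption that you have exported both series (for example, clicks from Search Console and topic interest from Google Trends) as equally spaced lists of numbers are all illustrative.

```python
def relative_change(series: list[float]) -> float:
    """Fractional change from the first to the last value of a series."""
    if not series or series[0] == 0:
        raise ValueError("series must be non-empty and start with a non-zero value")
    return (series[-1] - series[0]) / series[0]


def likely_cause(clicks: list[float], interest: list[float], tolerance: float = 0.10) -> str:
    """Hypothetical heuristic: is a traffic drop explained by falling search interest?

    tolerance is an illustrative allowance (as a fraction), not an industry standard.
    """
    click_change = relative_change(clicks)
    interest_change = relative_change(interest)
    if click_change >= 0:
        return "no decline"
    # Interest fell, and clicks fell no more than `tolerance` beyond it:
    # the drop is consistent with declining user interest.
    if interest_change < 0 and click_change >= interest_change - tolerance:
        return "declining user interest"
    # Clicks fell while interest held steady, or fell much faster than interest:
    # look at the content, the SEO, and the other factors.
    return "investigate other causes"


if __name__ == "__main__":
    # Illustrative numbers only, not real data.
    print(likely_cause([1000, 900, 800], [100, 90, 80]))    # declining user interest
    print(likely_cause([1000, 700, 500], [100, 100, 100]))  # investigate other causes
```

A "declining user interest" result doesn’t prove the cause; it only suggests the content is probably not the first place to look.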

The problem with inept SEO catch-all phrases is that, because they don’t describe anything specific, their meaning tends to morph until they describe things well beyond what they originally (and ineptly) described.

Here are other reasons why traffic could decline (both slowly and precipitously):

Content Decay is one of many SEO labels put on problems or strategies in order to make old problems and methods appear to be new. Too often those labels are inept and cause confusion because they don’t describe the problem.

Putting a name to the cause of the problem is a good practice. So rather than use fake names like Content Decay, make a conscious effort to use the actual name of the problem or solution. In the case of Content Decay, it’s best to identify the problem (declining user interest) and refer to it by that name.

Online content tends to become outdated or irrelevant over time. This can happen due to industry changes, shifts in user interests, or simply the passing of time.

In the context of SEO, outdated content impacts how useful and accurate the information is for users, which can negatively affect website traffic and search rankings.

To maintain a website’s credibility and performance in search results, SEO professionals need to identify and update or repurpose content that has become outdated.

Not all old content needs to be deleted. It depends on what kind of content it is and why it was created. Content that shows past events, product changes, or uses outdated terms can be kept for historical accuracy.

Old content provides context and shows how a brand or industry has evolved over time. It’s important to consider value before removing, updating, or keeping old content.
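A simple starting point for that review is to flag pages that haven’t been updated within a chosen window. This is a minimal sketch, assuming you already have each URL’s last-updated date; the one-year window and the example URLs are hypothetical. In keeping with the point above, it produces a review queue, not a list of pages to delete.

```python
from datetime import date, timedelta


def pages_due_for_review(last_updated: dict[str, date], today: date,
                         max_age_days: int = 365) -> list[str]:
    """Return URLs not updated within max_age_days, oldest first.

    Age alone doesn't make a page outdated, so treat the result as a
    review queue (update, repurpose, or keep), not a deletion list.
    """
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for _, url in sorted(stale)]


if __name__ == "__main__":
    # Hypothetical URLs and dates.
    pages = {
        "/guide-2019": date(2019, 3, 1),
        "/pricing": date(2024, 10, 1),
        "/company-history": date(2015, 6, 1),
    }
    print(pages_due_for_review(pages, today=date(2024, 11, 8)))
```

Here "/company-history" would surface first, but a page that documents how a company has evolved may be worth keeping exactly as it is.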

Website owners and SEO professionals should take the following steps to avoid confusing users with outdated content:

These strategies help people understand a page’s relevance and assist them in getting the most accurate information for their needs.

Featured Image by Shutterstock/Blueastro

I have 25 years hands-on experience in SEO, evolving along with the search engines by keeping up with the latest …

Copyright © 2024 Search Engine Journal. All rights reserved. Published by Alpha Brand Media.

Soundtrap for Storytellers, Podcast Creation Tool Now Launches Transcription Service in French, German, Spanish, Brazilian Portuguese, Portuguese, and Swedish – Business Wire

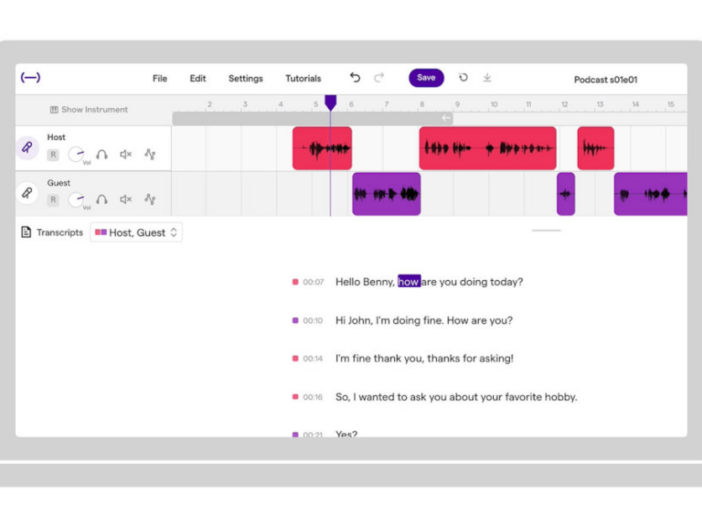

Soundtrap for Storytellers (Graphic: Business Wire)

STOCKHOLM & NEW YORK–(BUSINESS WIRE)–Soundtrap, a part of Spotify, today announced that its Soundtrap for Storytellers transcription service will now be available in French, Spanish, German, Brazilian Portuguese, Portuguese and Swedish, enabling millions of users worldwide to create quality podcasts with this easy-to-use solution.

Launched in May this year, Soundtrap for Storytellers is a comprehensive podcast creation solution that offers all of the following features in the same service: recording, remote multi-track interviewing with video chat, smart transcribing and editing of the spoken-word audio file as a text document, full audio production capabilities, the publishing of its transcript to optimize SEO, and the publishing of the podcast to Spotify.

The interactive transcript and editing studio has proven extremely popular: it saves hours of time by letting users transcribe the spoken word live and edit the audio file as they would a text document, eliminating the need to type out text or listen to the podcast over and over again. Initially available only in English, the transcribing solution can now be used in their mother tongue by anyone who speaks French, Spanish, German, Brazilian Portuguese, Portuguese or Swedish.

Equipped with a wide range of intuitive features, Soundtrap for Storytellers allows podcasters to focus on the art of storytelling by significantly reducing the time and investment typically needed to make podcasts sound professional. The full suite of services can be accessed via desktop, and a select set of recording and editing features is available on iOS and Android. Additional tools:

Collaborate and Remotely Interview Guests: Soundtrap for Storytellers enables podcasters to seamlessly collaborate with others from anywhere around the world. The platform is cloud-based and allows multiple people to talk, record (on separate tracks) and work on the same podcast by sending a link to join the session remotely.

Increase Discoverability: Publish your podcast transcript to increase SEO, drive traffic to your podcast and gain new fans.

Sound Effects: Create your own jingle and complete your production with sound design using Soundtrap’s built-in instruments and loops, and access an enormous sound library without having to leave the studio.

Storytellers was developed specifically for podcasters looking to create professional-sounding podcasts from a single platform. There’s a library of built-in musical loops and sound effects to access, or users can easily drag and drop any audio directly into the Soundtrap window. Another of its built-in tools lets users manage their podcast episodes and publish them directly to Spotify.

“We built Soundtrap for Storytellers around solving some of the biggest pain points for podcasters, with an aim of providing a streamlined platform that addresses every element of the podcast creation process. Adding six more languages to the platform’s capability gives millions of new users the chance to use the smart transcript feature in their own language,” said Per Emanuelsson, managing director of Soundtrap at Spotify.

Pricing for Soundtrap for Storytellers begins at $14.99/month, and an annual plan is available starting at $11.99/month. French, Spanish, German and Swedish are available immediately. The transcription service in Brazilian Portuguese and Portuguese will be available in December.

Resources

Press Kit: https://press.soundtrap.com/presskits

Photo: https://press.soundtrap.com/media/55527/laptop-transcriptjpg

Video about transcript: https://press.soundtrap.com/media/77294/soundtrap-for-storytellers-chapter-3-interactive-transcript

Storytellers video: https://press.soundtrap.com/media/77288/soundtrap-for-storytellers

ABOUT SOUNDTRAP: Soundtrap is the first cloud-based audio recording platform to work across all operating systems, enabling users to co-create music and podcasts anywhere in the world. The company is headquartered in Stockholm, Sweden. Soundtrap provides an easy-to-use music and audio creation platform for all levels of musical interest and ability and is used across the K-12 and higher-education markets. In December 2017, Soundtrap was acquired by Spotify. For more information, visit https://www.soundtrap.com/.

Ida Ståhlnacke

idas@spotify.com

+46 72 249 98 11

How to Transcribe a Podcast Fast (Step-by-Step Guide) – Castos

If you’re spending the time and energy creating podcast episodes, it’s important to make your content as accessible and discoverable as possible. You can add a ton of value to each episode with a transcript. Here’s how to transcribe a podcast.

A podcast transcript is a word-for-word account of an episode’s content. You (or automated software) listen to the episode’s audio file and write down every word spoken by you and/or your guests.

Some of the big podcast directories now automatically produce fairly accurate transcripts on their platforms. Apple Podcasts is one of the most recent platforms to produce transcripts for you, but they’re only available to listeners using that app.

You can also include your podcast transcript within your RSS feed. Some platforms will then display the transcript to their listeners.
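If you can edit your feed, one widely used convention for this is the `<podcast:transcript>` tag from the Podcasting 2.0 namespace. The sketch below uses Python’s standard `xml.etree.ElementTree` to add that tag to an episode `<item>`; the episode title and transcript URL are hypothetical, and which apps actually read the tag varies by platform.

```python
import xml.etree.ElementTree as ET

# Podcasting 2.0 namespace that defines <podcast:transcript>.
PODCAST_NS = "https://podcastindex.org/namespace/1.0"
ET.register_namespace("podcast", PODCAST_NS)


def add_transcript(item: ET.Element, url: str, mime_type: str = "text/html",
                   language: str = "en") -> ET.Element:
    """Append a <podcast:transcript> element pointing at a hosted transcript."""
    return ET.SubElement(
        item,
        f"{{{PODCAST_NS}}}transcript",
        {"url": url, "type": mime_type, "language": language},
    )


if __name__ == "__main__":
    item = ET.Element("item")
    ET.SubElement(item, "title").text = "Episode 1"  # hypothetical episode
    add_transcript(item, "https://example.com/episodes/1/transcript.html")  # hypothetical URL
    print(ET.tostring(item, encoding="unicode"))
```

Pointing the tag at the transcript page on your own site keeps a single copy of the text, so your website and any apps that read the tag show the same transcript.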

Finally, you can post your transcript on your own podcast website. It’s best to include a transcription for each episode on the same page as your audio player and show notes.

Why should you include a transcript alongside your audio files? Let’s look at the benefits of podcast transcriptions.

According to the World Health Organization, 5% of people struggle with some kind of disabling hearing impairment. Podcasting is an audio-first medium, but that doesn’t mean you can’t make your content available to people with hearing impairments.

An audio transcription gives people with hearing loss a way to enjoy your amazing content. They might use it to supplement the parts they can’t hear or they might read it through entirely.

Podcast episode transcriptions also make your show accessible to non-native speakers. You can help them out by putting that content in written form for them to look up.

Your show notes are a valuable way to help Google and other search engines understand what your episodes are about, but they usually run only a few hundred words. That’s hardly the type of long-form content Google likes to show at the top of its results.

A podcast transcript, however, can reach 6,000 words from a 30-minute episode. That’s a lot of content that can give your website a significant search engine optimization boost, ultimately making it easier for new listeners to find your content via search engines.
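The 6,000-word figure implies a speaking rate of about 200 words per minute (6,000 ÷ 30). Here is a minimal sketch for estimating a transcript’s length from episode duration, assuming that rate. Slower or more conversational speakers will produce fewer words, so the rate is a parameter.

```python
def estimated_transcript_words(episode_minutes: float, words_per_minute: int = 200) -> int:
    """Rough transcript length.

    The default 200 wpm is the rate implied by 6,000 words per 30 minutes;
    it is an assumption, not a measured value for any particular show.
    """
    return round(episode_minutes * words_per_minute)


if __name__ == "__main__":
    print(estimated_transcript_words(30))       # 6000
    print(estimated_transcript_words(30, 150))  # 4500 at a slower pace
```

Even at the slower pace, a half-hour episode yields thousands of words, roughly ten times what typical show notes provide.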

Many content creators avoid linking to podcast episodes that lack a transcript because there’s nothing for their readers/followers to explore without listening to an entire episode.

However, content creators don’t mind linking to podcast episodes with podcast transcripts because their readers/followers have something to explore without committing to a full episode or following a lot of steps. So if you want more backlinks (great for SEO!), publish transcriptions.

A podcast transcript is a valuable bank of written copy you can use to create other forms of content. You might use it for email marketing campaigns, social media posts, or infographics. Many podcasters use their transcriptions as sources for blog articles.

Ready to start your own podcast? Learn the nitty-gritty details of starting your own show in our comprehensive guide: Learn how to start a podcast.

In order to make smart decisions, you need access to the best information. Building an audience and growing your show require clear data.

Instead of reviewing your data across multiple platforms, Castos puts everything in one simple place. It’s now easier than ever before to access a full snapshot of an episode in one look.

Castos Analytics offers a plethora of statistics about your podcasts that will help you to learn more about your audience.

Our Essentials and Growth plans offer listener analytics. Our Pro and Premium plans offer advanced analytics. See our pricing.

Understanding your podcasts’ performance is essential, which is why accurate analytics matter. Join Castos to learn as much as you can about your listeners. Every insight and nugget of wisdom will help you grow your show.

Transcribing a podcast can take anywhere from 4 to 10 times the length of the audio, depending on several factors:

Manual Transcription: For someone transcribing manually, it usually takes 4-6 hours to transcribe one hour of your audio track. This depends on the speaker’s clarity, background noise, and the complexity of the content. If the content is highly technical and the transcriber is unfamiliar with it, a human transcription could take even longer.

Automated Transcription Tools: AI-based transcription tools can produce accurate transcripts in a fraction of the time, but these tools often come with a cost.
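Using the 4-6 hours per audio hour figure for manual transcription, you can estimate the effort for an episode before deciding whether to do it yourself. A minimal sketch; the ratios are the rough figures quoted above and will stretch for noisy or highly technical audio.

```python
def manual_transcription_hours(audio_minutes: float, low_ratio: float = 4.0,
                               high_ratio: float = 6.0) -> tuple[float, float]:
    """Estimated (low, high) hours to transcribe by hand.

    Assumes 4-6 hours of work per hour of audio.
    """
    audio_hours = audio_minutes / 60
    return (audio_hours * low_ratio, audio_hours * high_ratio)


if __name__ == "__main__":
    print(manual_transcription_hours(30))  # (2.0, 3.0)
    print(manual_transcription_hours(60))  # (4.0, 6.0)
```

For a 30-minute episode this works out to 2 to 3 hours, which matches the estimate for transcribing an episode yourself with pausing and rewinding.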

The cost of podcast transcripts varies based on the method you choose. It also depends on the quality and speed you’re looking for.

Now that you understand the benefits of a podcast transcript, you’ll need to understand the transcription process.

Writing your own podcast transcription is pretty straightforward: Just listen to your audio recording and write what you and your guests say. Avoid transcribing the “ums,” “uhs,” “likes,” and other filler words. There’s no need for that in a transcript.

Transcription accuracy is high with this method, but it costs a lot of time. A 30-minute episode could take two to three hours to transcribe with pausing and rewinding. After transcribing a few episodes, most podcasters decide that their time is better spent producing content.

You can speed up the process of creating your own podcast transcript with a voice-to-text app that automatically types whatever you speak. Let it run while you record your episode and it will capture the audio and record it as text. You’ll still need to clean up the app’s output, however.
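Part of that cleanup is removing filler words. A minimal sketch using Python’s `re` module, assuming a short hypothetical filler list. It deliberately leaves out "like", which is often a meaningful word and can’t be removed safely without reading the context, so a human pass is still needed.

```python
import re

# Hypothetical filler list; "like" is excluded because it is often meaningful.
FILLERS = ("um", "uh", "erm")
# Match a standalone filler (word boundaries keep "umbrella" or "drum" intact),
# any whitespace before it, and an optional trailing comma.
_FILLER_RE = re.compile(r"\s*\b(?:" + "|".join(FILLERS) + r")\b,?", re.IGNORECASE)


def strip_fillers(text: str) -> str:
    """Remove standalone filler words from transcript text."""
    cleaned = _FILLER_RE.sub("", text)
    # Collapse any doubled spaces left behind and trim the ends.
    return re.sub(r" {2,}", " ", cleaned).strip()


if __name__ == "__main__":
    print(strip_fillers("So, um, we started the show and uh it grew fast."))
```

The sketch doesn’t fix capitalization when a sentence starts with a filler, which is one more reason to read the cleaned text before publishing.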

If you want your episodes transcribed by a human, but don’t want to do it yourself, your only option is to pay someone to do it. This is expensive. The cheapest services cost about $0.50/minute of audio. If you need a native speaker (and you do), that price can be higher.
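At per-minute pricing the math is simple, and it adds up quickly over a season. A minimal sketch; the default rate is the "cheapest services" figure above, not a quote from any particular vendor, and native-speaker rates will be higher.

```python
def human_transcription_cost(audio_minutes: float, rate_per_minute: float = 0.50) -> float:
    """Cost in dollars at a per-minute rate (default: the low-end $0.50/minute)."""
    return round(audio_minutes * rate_per_minute, 2)


if __name__ == "__main__":
    print(human_transcription_cost(30))       # 15.0 for one 30-minute episode
    print(human_transcription_cost(52 * 30))  # 780.0 for a year of weekly episodes
```

A weekly 30-minute show therefore costs at least $15 per episode, or $780 a year, before any premium for native speakers.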

The easiest way to add a transcription to each podcast is to let podcast transcription software do it automatically.

If you use Castos as your podcast host, our transcription service will automatically produce a full, word-for-word transcript of each episode with high transcription accuracy.

We’ve teamed up with the industry-leading speech-to-text technology provider to offer an entirely seamless transcription experience that is available to all of our Castos hosting customers. If you’ve already published episodes with Castos, you can even go back and transcribe previous episodes.

If you don’t use Castos to host your podcast, you can use an automated transcription service like Rev or Descript.

Need inspiration? Here are some examples of shows that include podcast episode transcriptions in aesthetically pleasing and user-friendly ways.

This American Life keeps their pages neat by adding a link to the transcript at the top of the page.

Clicking that link takes you to a new page with the full transcript.

This method doesn’t concentrate SEO value on one page, but it’s an easy experience for the user. There’s a link from the transcript back to the episode anyway, so if a Google search brings you to the transcript, there’s an easy path to listen.

Freakonomics takes a pretty standard approach. They publish their podcast episode transcriptions right beneath their introduction paragraph, and they put the whole piece in quotes so you can identify the transcript at a glance.

Masters of Scale takes their podcast pages very seriously. Each page is well designed with lots of information, including custom photography from the interview. The last element on the page is this nicely formatted transcript.

StoryCorps is another podcast with a button to read the transcript. It’s right under the title, so visitors can’t miss it.

However, unlike This American Life, StoryCorps keeps all the content on the same page in a popup overlay. This concentrates the SEO value while also keeping things organized.

You have a few options when it comes to making your podcast episode transcriptions available to your readers. Choose the option that’s right for your show and your listeners.

On each episode’s page. (Recommended method.) Post each transcription directly beneath your show notes and audio player on a blog page. This way everything relating to that episode is in one easy-to-find place, and Google knows what the page is about.

Podcast transcript collection page. A transcript collection page is simply a page on your website that’s dedicated to podcast episode transcripts. If you don’t have many episodes, or if your episodes are short, you can post all of your transcripts on the same page. Otherwise, a collection page would contain a list of links to each transcript.

Downloadable transcripts. Another method is to make the transcript downloadable. Upload the document to your website and create a link to that file on the episode’s page. The downside is that you lose most of the benefits we listed above: downloadable documents are less accessible, add little SEO value to the episode page, and people rarely link to them.
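For the recommended on-page option, the episode page simply stacks the audio player, the show notes, and the full transcript in one document. A minimal sketch using Python’s standard `html.escape`; the function name, CSS class names, and markup are hypothetical, and most sites would do this in their CMS or theme templates rather than in code.

```python
from html import escape


def episode_page(title: str, audio_url: str, show_notes: str, transcript: str) -> str:
    """Render one episode page: audio player, then show notes, then the transcript.

    Hypothetical minimal markup; all text is HTML-escaped.
    """
    # One <p> per blank-line-separated paragraph of the transcript.
    paragraphs = "\n".join(
        f"<p>{escape(p.strip())}</p>" for p in transcript.split("\n\n") if p.strip()
    )
    return (
        "<article>\n"
        f"<h1>{escape(title)}</h1>\n"
        f'<audio controls src="{escape(audio_url)}"></audio>\n'
        f'<section class="show-notes"><p>{escape(show_notes)}</p></section>\n'
        f'<section class="transcript"><h2>Transcript</h2>\n{paragraphs}\n</section>\n'
        "</article>"
    )


if __name__ == "__main__":
    print(episode_page("Episode 1", "https://example.com/ep1.mp3",
                       "Links & resources", "Hello.\n\nWelcome back."))
```

Keeping the transcript in the same page as the player and notes is what concentrates all of that text on the one URL you want to rank.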

Every episode you publish on the Castos platform can be automatically transcribed into a full, word-for-word account of your episode (with remarkably high transcription accuracy).

We’ve teamed up with the industry-leading speech-to-text technology provider, Rev, to offer an entirely seamless transcription experience that is available to all of our Castos hosting customers.

All you need to do is head over to your Castos dashboard and choose the podcast you’d like transcriptions for (if you have multiple podcasts). In the AI Assistant section, turn on the automatic transcription feature. You’ll need to enable this feature for each podcast you host with Castos.

From that point forward, each of your podcast episodes will receive a searchable transcript in its episode details area. You can use the transcript editor there to clean up the content or make any changes you see fit.

From there you can copy/paste the transcription into your website if you’d like, or export a PDF copy of the transcription to offer as a download for your listeners.

This method is far faster than using human transcribers and just as reliable. Best of all, it works for all podcasters, even if you have hours and hours of audio.

If you’ve already been publishing episodes with us at Castos for some time, you can even go back and transcribe previous episodes.

Click into a podcast to find the list of episodes. Click on the episode that needs a transcript.

In the details editor, click the Generate Transcript button. This will create a transcript for this episode only. Do the same for every episode that needs a transcript.

Now that you know how to transcribe a podcast, we recommend adding one to every episode. It’s free SEO and accessibility value for content you’ve already produced!

Do you offer plans to transcribe per podcast as opposed to per minute?

Currently I pay $5 per podcast on Podcast Transcribe website. Do you guys have something like that?

Less brand safety, more SEO spend in 2025, Forrester predicts – Marketing Brew

Top 5 SEO Companies in India – 24-7 Press Release

MOHALI, INDIA, November 08, 2024 /24-7PressRelease/ — Every business needs SEO. There’s no doubt about it. No matter the size or business goals of your venture, you need visibility, ranking, and, ultimately, lead conversions.

To sustain business growth, you need an effective SEO strategy that delivers viable results. There are dozens of marketing strategies, both digital and traditional, but in the long run, SEO will be your best call.

So why choose an SEO company over other paid marketing strategies?

Here’s your answer below!

Why Choose an SEO Company for Your Business?

Outsourcing your SEO needs can be advantageous to your business. Choosing the best SEO company is crucial, as businesses appearing on the first page of Google are more likely to be trusted by users.

SEO companies come up with strategies that affect your rankings, visibility, and overall digital performance.

Long-term SEO strategies can:

● Build trust and credibility

● Give a competitive edge

● Promote long-term growth

● Fetch quality leads

● Boost profitability

● Improve customer experience

Outsourcing your SEO needs to the best SEO companies in India can increase your revenue without overstretching in-house resources. Their expertise and client reviews are testaments to their excellent service over the years.

Below is the list of the Top 5 SEO Companies in India that have helped businesses with foolproof strategies for market growth.

1. SEO Experts Company India

SEO Experts Company India has built a solid reputation for its performance-driven approach, ensuring clients’ websites rank highly on search engines. Known for customizing strategies to fit each client’s business goals, the firm specializes in bespoke on-page and off-page SEO, link building, PPC management, and content creation and optimization. Their industry experts use cutting-edge tools and deep analytics to boost organic traffic and drive conversion rates.

Their work with clients in both domestic and international markets speaks to their broad expertise.

Why Choose Them?

● Strong focus on driving business growth using SEO methodologies

● Tailored strategies that align with client objectives

● Specialize in both local and global SEO tactics

2. SAG-IPL

SAG-IPL is a unique agency that offers extended digital marketing solutions, specifically focusing on SEO. It blends the old and new schools of SEO, including voice search optimization and AI-driven keyword research. Specializing in technical SEO audits, link building, and content marketing services, SAG-IPL is all about providing solutions that work now and in the future.

The agency explains to clients how their SEO campaigns are being handled, which keeps businesses engaged.

Why Choose Them?

● Focus on innovative SEO trends such as voice search

● Dedicated account managers for clear communication

● Extensive portfolio with diverse industries

3. Bruce Clay India

Bruce Clay India is an extension of the global SEO powerhouse Bruce Clay, Inc., which has shaped SEO practices for numerous businesses. Their Indian division brings world-class SEO methodologies and a local understanding of the market. Bruce Clay India offers a holistic approach to optimizing websites, from comprehensive site audits to advanced SEO training.

Their commitment to ethical SEO practices ensures that clients’ rankings are improved and sustained over the long term.

Why Choose Them?

● Trusted global SEO leader with a localized approach

● Expert-level SEO training for internal teams

● Ethical SEO practices that focus on sustainability

4. SEO.com

The SEO company SEO.com has been offering custom solutions to help increase businesses’ website rankings. Its branch in India specializes in organic search, technical SEO, and content marketing. SEO.com’s unique selling point is that it combines old-school SEO with new technological trends like AI SEO and machine learning.

They also aim to deliver goal-oriented, data-driven results that prove their effectiveness to clients.

Why Choose Them?

● Combination of traditional and AI-driven SEO

● Transparent reporting with measurable outcomes

● Excellent client retention and satisfaction rates

5. VJG Interactive

VJG Interactive brings a data-driven approach to SEO, helping businesses of all sizes achieve significant visibility across search engines. Known for its local and global SEO expertise, VJG Interactive offers a full range of services, including keyword research, link-building strategies, and mobile SEO optimization.

What makes VJG Interactive a top choice is its commitment to innovation. It always stays ahead of the latest SEO trends, such as Core Web Vitals and mobile-first indexing.

Why Choose Them?

● Data-driven and trend-focused SEO services

● Expertise in mobile SEO and Core Web Vitals

● Proven track record in both local and global SEO campaigns

Bottom Line:

Outsourcing your SEO helps you attain higher rankings and ensures those rankings deliver value. These top 5 SEO companies in India bring experience, creativity, and practical approaches to performance in a dynamic online market.

Selecting the right SEO company is important for long-term success.

Whether you are a small business or a big company, these SEO companies have the expertise to make your business a leader in search engine results and help it grow over the long run.

# # #

© 2004-2024 24-7 Press Release Newswire. All Rights Reserved.